Stratification learning can be viewed as an unsupervised, exploratory clustering process that infers a decomposition of data sampled from a stratified space into disjoint subsets that capture recognizable and meaningful structural information. In recent years, there have been significant efforts in computational topology that are relevant to stratification learning. In this talk, I will give an overview of such efforts and discuss challenges and opportunities. In particular, I will focus on stratification learning using local homology, persistent homology, sheaves, and discrete stratified Morse theory.

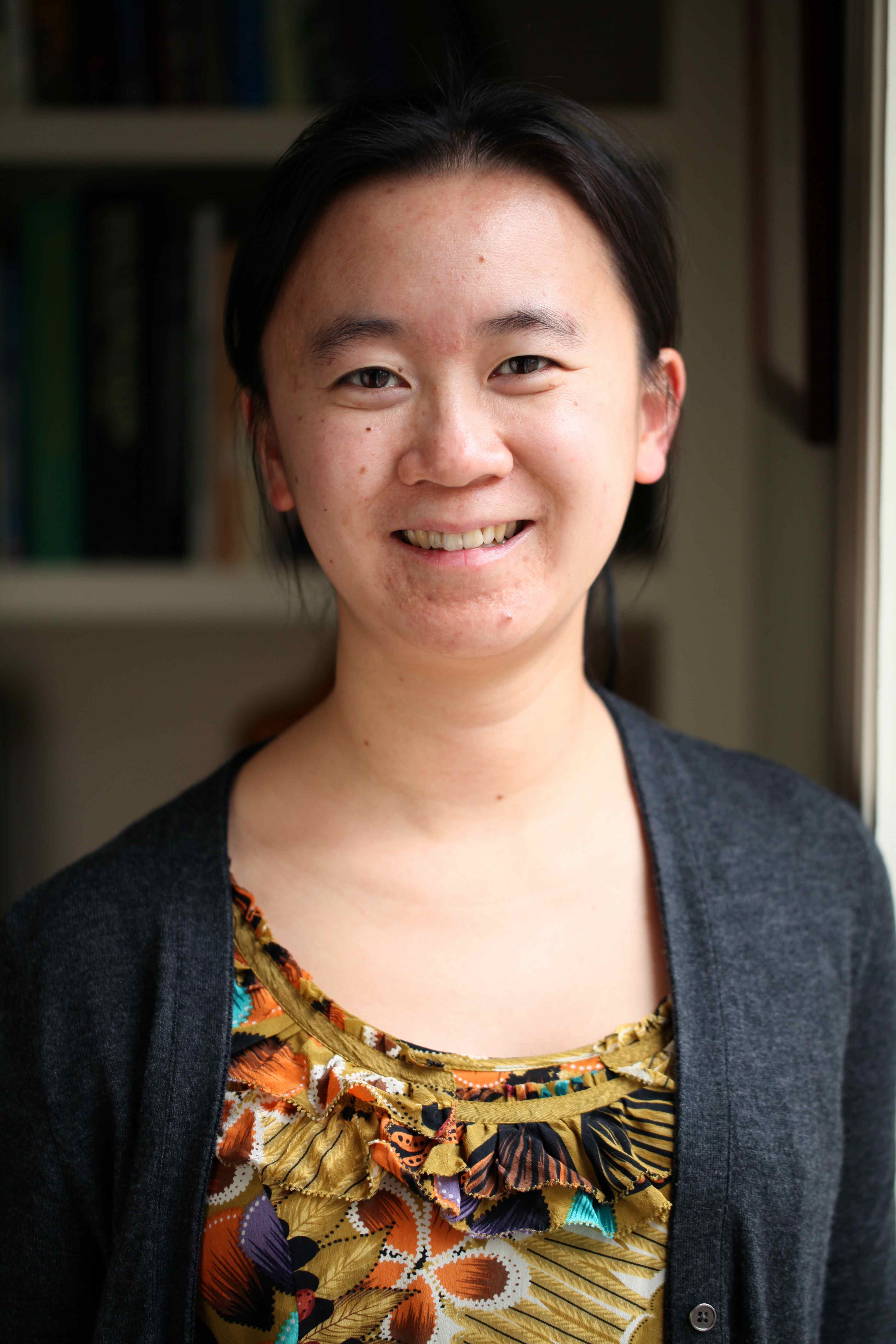

Bio: Dr. Bei Wang is an assistant professor at the School of Computing and a faculty member at the Scientific Computing and Imaging (SCI) Institute, University of Utah. She received her Ph.D. in Computer Science from Duke University. Her research interests include topological data analysis, data visualization, machine learning, and data mining.

3rd Workshop on Geometry and Machine Learning
