* API for shared-memory parallel programming * standard * www.openmp.org * portable, PRAM like (CREW) * easy, but hides communication costs * easy API * C, C++, Fortran * compiler directives * runtime library routines * environment variables * nested parallelism, dynamic threads, no IO

c

#include <stdio.h>

int main(void)

{

#pragma omp parallel

printf("Hello, world.\n");

return 0;

}

c

#include <stdio.h>

#define N 1000

extern void combine(double, double);

extern double big_comp(int);

int main() {

int i;

double answer, res;

answer = 0.0;

for (i=0; i<N; ++i) {

res = big_comp(i);

combine (answer, res);

}

printf("%f\n", answer);

}

c

#include <stdio.h>

#include <omp.h>

#define N 1000

extern void combine(double, double);

extern double big_comp(int);

int main() {

int i;

double answer, res;

answer = 0.0;

for (i=0; i<N; ++i) {

res = big_comp(i);

combine (answer, res);

}

printf("%f\n", answer);

}

c

#include <stdio.h>

#include <omp.h>

#define N 1000

extern void combine(double, double);

extern double big_comp(int);

int main() {

int i;

double answer, res[N];

answer = 0.0;

#pragma omp parallel for

for (i=0; i<N; ++i) {

res[i] = big_comp(i);

}

for (i=0; i<N; ++i) {

combine (answer, res[i]);

}

printf("%f\n", answer);

}

###

#pragma omp directive [clause list]

* create teams for threads

* specify work sharing

* declare shared/private variables

* synchronization

* exclusive execution (critical regions)

#pragma omp parallel

c

#include <stdio.h>

#include <omp.h>

int main() {

int i=5; // shared variable

#pragma omp parallel

{

int c; // local/private variable to each thread

c = omp_get_thread_num();

printf("c = %d, i=%d\n"")

}

return 0;

}

bash

$ g++ -fopenmp ex1.cpp -o ex1

$ icpc -openmp ex1.cpp -o ex1

$ OMP_NUM_THREADS=2 ./ex1

#### #pragma omp parallel num_threads(3)

#### environment variable - OMP_NUM_THREADS

bash: export OMP_NUM_THREADS=8

csh: setenv OMP_NUM_THREADS=8

c

#pragma omp parallel sections num_threads(3)

{

#pragma omp section

{

task_1();

}

#pragma omp section

{

task_2();

}

#pragma omp section

{

task_3();

}

}

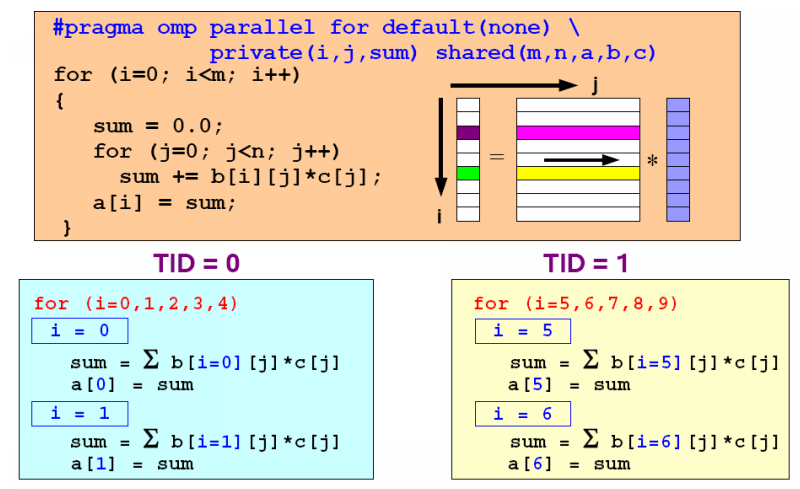

c

#pragma omp parallel for default(none) \

private (i,j,sum) shared(m,n,a,b,c)

for (i=0; i<m; ++i) {

sum = 0.0;

for (j=0; j<n; ++j)

sum += b[i][j]*c[j];

a[i] = sum;

}

c

#pragma omp parallel

{

#pragma omp single

// Only a single thread can read the input.

printf_s("read input\n");

// Multiple threads in the team compute the results.

#pragma omp for nowait

for (i = 0; i < size; i++)

b[i] = a[i] * a[i];

#pragma omp for

for (i = 0; i < size; i++)

c[i] = a[i]/2;

#pragma omp single

// Only a single thread can write the output.

printf_s("write output\n");

}

####

#pragma omp parallel for reduction(+:sum)

c

n = 1000;

result = 0.0;

#pragma omp parallel for reduction(+:result)

for (i=0; i<n; ++i)

result += a[i]*b[i];

c

#pragma omp critical(dataupdate)

{

datastructure.reorganize();

}

...

#pragma omp critical(dataupdate)

{

datastructure.reorganize_again();

}

c

omp_lock_t lck1, lck2;

int id;

omp_init_lock(&lck1);

omp_init_lock(&lck2);

#pragma omp parallel shared(lck1, lck2) private(id)

{

id = omp_get_thread_num();

omp_set_lock(&lck1);

printf("thread %d has the lock \n", id);

printf("thread %d ready to release the lock \n", id);

omp_unset_lock(&lck1);

while (! omp_test_lock(&lck2)) {

// do something useful while waiting for the lock

do_something_else(id);

}

go_for_it(id); // Thread has the lock

omp_unset_lock(&lck2);

}

omp_destroy_lock(&lck1);

omp_destroy_lock(&lck2);

c

#pragma omp parallel

{

work1();

work2();

}

#pragma omp parallel

work3();

work4();

c

void compute(int n) {

int i;

double h, x, sum;

h = 1.0/n;

sum = 0.0;

#pragma omp for reduction (+:sum) shared(h)

for (i=0; i<n; ++i) {

x = h*(i-0.5);

sum += 1/(1+x*x);

}

pi = h*sum;

}

c

int i,j;

#pragma omp parallel for

for (i=0; i<n; ++i)

for (j=0; j<m; ++j) {

a[i][j] = compute(i, j);

}

c

int a, b, i;

#pragma omp parallel for private(i,a,b)

for (i=0; i<n; ++i) {

b++;

a = b*i;

}

c = a+b;

c

int a, b=0, i;

#pragma omp parallel for private(i), firstprivate(b), \

lastprivate(a,b)

for (i=0; i<n; ++i) {

b++;

a = b*i;

}

c = a+b;